Workflow

- 1. PIL (Pillow): Open an Image

- 2. OpenCV: Preprocess an Image

- 3. Tesseract (pytesseract): OCR an Image

Install the Libraries

PIL (Pillow)

Install: https://pillow.readthedocs.io/en/stable/installation/basic-installation.html

pip install --upgrade Pillow

Documentation: https://pillow.readthedocs.io/en/stable/reference/Image.html

OpenCV

Install: https://pypi.org/project/opencv-python

pip install opencv-python

Tesseract

Install Tesseract: https://guides.library.illinois.edu/c.php?g=347520&p=4121425

On macOS, installing Homebrew's tesseract-lang formula pulls in Tesseract together with its extra language data. Check the installed version afterwards:

    % brew install tesseract-lang
    % tesseract --version
    tesseract 5.4.1
     leptonica-1.84.1
      libgif 5.2.1 : libjpeg 8d (libjpeg-turbo 3.0.0) : libpng 1.6.43 : libtiff 4.6.0 : zlib 1.2.12 : libwebp 1.4.0 : libopenjp2 2.5.2
     Found NEON
     Found libarchive 3.7.4 zlib/1.2.12 liblzma/5.4.6 bz2lib/1.0.8 liblz4/1.9.4 libzstd/1.5.6
     Found libcurl/8.7.1 SecureTransport (LibreSSL/3.3.6) zlib/1.2.12 nghttp2/1.61.0

Install pytesseract: https://pypi.org/project/pytesseract

pip install pytesseract

Languages

List the language packs Tesseract has installed:

    import pytesseract

    print(pytesseract.get_languages(config='.'))

Output:

    ['afr', 'amh', 'ara', 'asm', 'aze', 'aze_cyrl', 'bel', 'ben', 'bod', 'bos', 'bre', 'bul', 'cat', 'ceb', 'ces', 'chi_sim', 'chi_sim_vert', 'chi_tra', 'chi_tra_vert', 'chr', 'cos', 'cym', 'dan', 'deu', 'div', 'dzo', 'ell', 'eng', 'enm', 'epo', 'equ', 'est', 'eus', 'fao', 'fas', 'fil', 'fin', 'fra', 'frk', 'frm', 'fry', 'gla', 'gle', 'glg', 'grc', 'guj', 'hat', 'heb', 'hin', 'hrv', 'hun', 'hye', 'iku', 'ind', 'isl', 'ita', 'ita_old', 'jav', 'jpn', 'jpn_vert', 'kan', 'kat', 'kat_old', 'kaz', 'khm', 'kir', 'kmr', 'kor', 'kor_vert', 'lao', 'lat', 'lav', 'lit', 'ltz', 'mal', 'mar', 'mkd', 'mlt', 'mon', 'mri', 'msa', 'mya', 'nep', 'nld', 'nor', 'oci', 'ori', 'osd', 'pan', 'pol', 'por', 'pus', 'que', 'ron', 'rus', 'san', 'sin', 'slk', 'slv', 'snd', 'snum', 'spa', 'spa_old', 'sqi', 'srp', 'srp_latn', 'sun', 'swa', 'swe', 'syr', 'tam', 'tat', 'tel', 'tgk', 'tha', 'tir', 'ton', 'tur', 'uig', 'ukr', 'urd', 'uzb', 'uzb_cyrl', 'vie', 'yid', 'yor']

To guess the language of an OCR result, use detect_langs from the langdetect package (pip install langdetect). Here ocr_result_original is the OCR text produced in the marginalia section below:

    from langdetect import detect_langs

    detect_langs(ocr_result_original)

The same language list is available from the command line:

    tesseract --list-langs

How to Open an Image in Python with PIL (Pillow)

https://github.com/1sitevn/python-jupyter/blob/main/ocr/01_OCR_Pillow.ipynb

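    from PIL import Image

    image_path = "../data/ocr/page_01.jpg"
    image = Image.open(image_path)

    # Size is reported as (width, height) in pixels
    print(image.size)

    # rotate() returns a new copy turned 90 degrees counter-clockwise
    # (pass expand=True to avoid cropping the corners); show() opens it in the default viewer
    image.rotate(90).show()

    # Save a copy of the original, unrotated image
    image.save("../data/temp/page_01.jpg")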

How to Preprocess Images for Text OCR in Python

https://github.com/1sitevn/python-jupyter/blob/main/ocr/02_OCR_Preprocess_Images.ipynb

    import cv2
    import pytesseract
    import numpy as np
    from PIL import Image
    from matplotlib import pyplot as plt

Opening an Image

    image_file = "../data/ocr/page_01.jpg"
    img = cv2.imread(image_file)

    def display(im_path):
        dpi = 80
        im_data = plt.imread(im_path)
        height, width = im_data.shape[:2]

        # What size does the figure need to be in inches to fit the image?
        figsize = width / float(dpi), height / float(dpi)

        # Create a figure of the right size with one axes that takes up the full figure
        fig = plt.figure(figsize=figsize)
        ax = fig.add_axes([0, 0, 1, 1])

        # Hide spines, ticks, etc.
        ax.axis('off')

        # Display the image.
        ax.imshow(im_data, cmap='gray')
        plt.show()

    display(image_file)

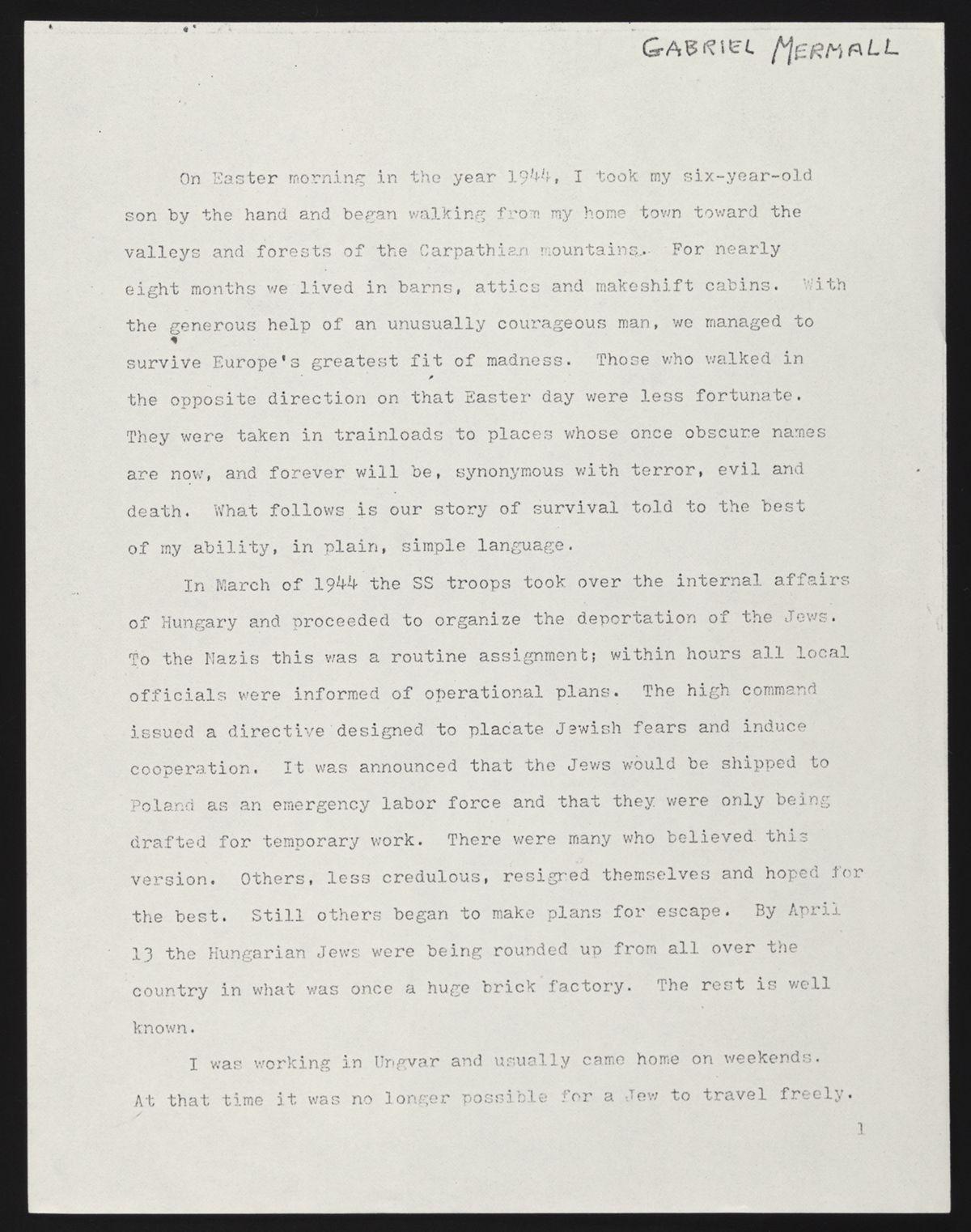

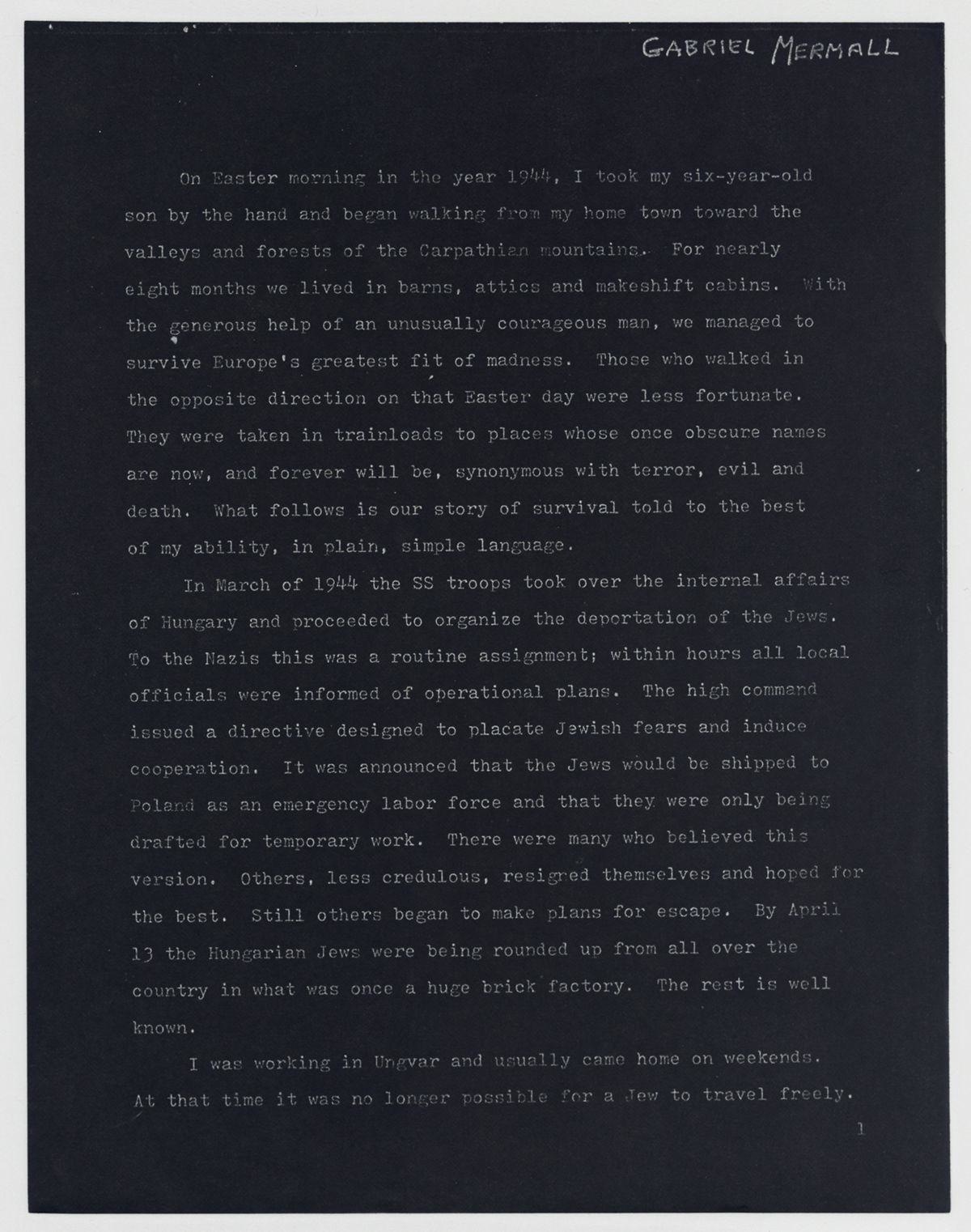

Inverted Images

    # bitwise_not flips every bit, so each 8-bit pixel value v becomes 255 - v
    inverted_image = cv2.bitwise_not(img)
    cv2.imwrite("../data/temp/inverted.jpg", inverted_image)
    display("../data/temp/inverted.jpg")

Rescaling
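Rescaling brings small scans up to a resolution Tesseract handles well; Tesseract's documentation recommends at least 300 DPI. A minimal NumPy-only sketch, assuming you know the scan's DPI: `scale_factor_for_dpi` and `resize_nearest` are hypothetical helpers, and nearest-neighbour sampling is used only to keep the example dependency-free. In the notebook you would normally call `cv2.resize(img, None, fx=f, fy=f, interpolation=cv2.INTER_CUBIC)` instead.

```python
import numpy as np


def scale_factor_for_dpi(current_dpi: float, target_dpi: float = 300.0) -> float:
    """Factor that brings a scan from current_dpi up to target_dpi."""
    return target_dpi / current_dpi


def resize_nearest(image: np.ndarray, fx: float, fy: float) -> np.ndarray:
    """Nearest-neighbour resize (illustration only; works for gray or color arrays)."""
    h, w = image.shape[:2]
    new_h, new_w = int(round(h * fy)), int(round(w * fx))
    # Map every output row/column back to its nearest source row/column
    rows = np.minimum((np.arange(new_h) / fy).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / fx).astype(int), w - 1)
    return image[rows[:, None], cols]
```

For a 150 DPI scan, `f = scale_factor_for_dpi(150)` gives 2.0, doubling both dimensions.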

Binarization

    def grayscale(image):
        # OpenCV loads images in BGR channel order, hence COLOR_BGR2GRAY
        return cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    gray_image = grayscale(img)
    cv2.imwrite("../data/temp/gray.jpg", gray_image)
    display("../data/temp/gray.jpg")
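OpenCV documents the RGB/BGR-to-gray conversion as the weighted sum Y = 0.299 R + 0.587 G + 0.114 B. The NumPy sketch below reproduces that formula to show what `grayscale()` computes; `bgr_to_gray` is a hypothetical name, and because OpenCV uses fixed-point arithmetic internally, its result may occasionally differ by one gray level.

```python
import numpy as np


def bgr_to_gray(image: np.ndarray) -> np.ndarray:
    """Weighted channel sum used by COLOR_BGR2GRAY (channels stored as B, G, R)."""
    b = image[..., 0].astype(np.float64)
    g = image[..., 1].astype(np.float64)
    r = image[..., 2].astype(np.float64)
    gray = 0.299 * r + 0.587 * g + 0.114 * b
    # Round to the nearest level and keep the usual 8-bit range
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)
```

Green carries the largest weight, so green ink turns out much lighter than blue ink of the same intensity. That can matter when thresholding colored annotations.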

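    # THRESH_BINARY: pixels brighter than 210 become 230, all others become 0 (black).
    # Use 255 as the maximum value if you want pure white instead of light gray.
    thresh, im_bw = cv2.threshold(gray_image, 210, 230, cv2.THRESH_BINARY)
    cv2.imwrite("../data/temp/bw_image.jpg", im_bw)
    display("../data/temp/bw_image.jpg")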
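The threshold of 210 above is picked by hand. Later sections pass `cv2.THRESH_OTSU` instead, which chooses the threshold automatically by maximizing the variance between the dark and light pixel classes. A NumPy-only sketch of both rules, for building intuition. `binary_threshold` and `otsu_threshold` are hypothetical helpers, and OpenCV's exact tie-breaking may differ.

```python
import numpy as np


def binary_threshold(gray: np.ndarray, thresh: int, maxval: int) -> np.ndarray:
    """THRESH_BINARY rule: maxval where the pixel is > thresh, 0 elsewhere."""
    return np.where(gray > thresh, maxval, 0).astype(np.uint8)


def otsu_threshold(gray: np.ndarray) -> int:
    """Return the level t that maximizes the between-class variance (Otsu's method)."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    levels = np.arange(256, dtype=np.float64)
    total = hist.sum()
    best_t, best_var = 0, -1.0
    for t in range(256):
        w0 = hist[: t + 1].sum()  # number of pixels <= t (dark class)
        w1 = total - w0           # number of pixels > t (light class)
        if w0 == 0 or w1 == 0:
            continue
        mu0 = (levels[: t + 1] * hist[: t + 1]).sum() / w0
        mu1 = (levels[t + 1:] * hist[t + 1:]).sum() / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t
```

Usage: `t = otsu_threshold(gray_image)` followed by `binary_threshold(gray_image, t, 255)` yields a black-and-white page without a hand-tuned value.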

Noise Removal

    def noise_removal(image):
        # Note: with a 1x1 kernel, dilate, erode and MORPH_CLOSE leave the image unchanged;
        # use a larger kernel such as (2, 2) or (3, 3) for these steps to have an effect.
        kernel = np.ones((1, 1), np.uint8)
        image = cv2.dilate(image, kernel, iterations=1)
        image = cv2.erode(image, kernel, iterations=1)
        image = cv2.morphologyEx(image, cv2.MORPH_CLOSE, kernel)
        # A 3x3 median filter removes isolated specks (salt-and-pepper noise)
        image = cv2.medianBlur(image, 3)
        return image

    no_noise = noise_removal(im_bw)
    cv2.imwrite("../data/temp/no_noise.jpg", no_noise)
    display("../data/temp/no_noise.jpg")
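In `noise_removal`, the median blur does most of the cleaning: each pixel is replaced by the median of its 3x3 neighbourhood, so a lone dark speck on a white page is outvoted by its eight white neighbours. A NumPy-only sketch of a 3x3 median filter for illustration. `median_blur_3x3` is a hypothetical helper; it replicates edge pixels at the border, which matches OpenCV's documented medianBlur behaviour.

```python
import numpy as np


def median_blur_3x3(image: np.ndarray) -> np.ndarray:
    """3x3 median filter on a single-channel image, borders replicated."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    # Stack the 9 shifted copies of the image, one per neighbourhood position
    windows = np.stack([padded[dy:dy + h, dx:dx + w]
                        for dy in range(3) for dx in range(3)])
    return np.median(windows, axis=0).astype(image.dtype)
```

A one-pixel speck disappears entirely, while text strokes wider than about two pixels mostly survive.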

Dilation and Erosion

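    def thin_font(image):
        # Morphology treats bright pixels as foreground, so invert to make the dark text bright
        image = cv2.bitwise_not(image)
        kernel = np.ones((2, 2), np.uint8)
        # Erosion shrinks the (now bright) strokes, making the font thinner
        image = cv2.erode(image, kernel, iterations=1)
        # Invert back to dark text on a light background
        image = cv2.bitwise_not(image)
        return image

    eroded_image = thin_font(no_noise)
    cv2.imwrite("../data/temp/eroded_image.jpg", eroded_image)
    display("../data/temp/eroded_image.jpg")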

    def thick_font(image):
        # Invert so the dark text becomes the bright foreground
        image = cv2.bitwise_not(image)
        kernel = np.ones((2, 2), np.uint8)
        # Dilation grows the strokes, making the font bolder
        image = cv2.dilate(image, kernel, iterations=1)
        # Invert back to dark text on a light background
        image = cv2.bitwise_not(image)
        return image

    dilated_image = thick_font(no_noise)
    cv2.imwrite("../data/temp/dilated_image.jpg", dilated_image)
    display("../data/temp/dilated_image.jpg")
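Grayscale erosion is a local minimum and dilation a local maximum. Because they act on the bright pixels, thin_font and thick_font invert the page first. The NumPy-only sketch below makes that explicit. It assumes a centered 3x3 kernel (rather than the notebook's 2x2, whose anchor handling in OpenCV is off-center) and replicated borders, so edge pixels may differ slightly from `cv2.erode`/`cv2.dilate`. All function names are hypothetical.

```python
import numpy as np


def min_max_filter(image: np.ndarray, k: int, op) -> np.ndarray:
    """Apply op (np.min or np.max) over every k x k neighbourhood (k odd)."""
    pad = k // 2
    padded = np.pad(image, pad, mode="edge")
    h, w = image.shape
    stack = np.stack([padded[dy:dy + h, dx:dx + w]
                      for dy in range(k) for dx in range(k)])
    return op(stack, axis=0)


def thin_font(image: np.ndarray, k: int = 3) -> np.ndarray:
    # Invert (dark text -> bright), erode = local minimum, invert back
    return 255 - min_max_filter(255 - image, k, np.min)


def thick_font(image: np.ndarray, k: int = 3) -> np.ndarray:
    # Invert, dilate = local maximum, invert back
    return 255 - min_max_filter(255 - image, k, np.max)
```

On a 3-pixel-wide black stroke, thin_font leaves a 1-pixel stroke and thick_font produces a 5-pixel one.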

Rotation / Deskewing

    new = cv2.imread("../data/ocr/page_01_rotated.JPG")
    display("../data/ocr/page_01_rotated.JPG")

    def getSkewAngle(cvImage) -> float:
        # Prep image: copy, convert to grayscale, blur, and threshold
        newImage = cvImage.copy()
        gray = cv2.cvtColor(newImage, cv2.COLOR_BGR2GRAY)
        blur = cv2.GaussianBlur(gray, (9, 9), 0)
        thresh = cv2.threshold(blur, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]

        # Dilate to merge text into meaningful lines/paragraphs.
        # A wide kernel on the X axis merges characters into a single line, closing the spaces,
        # while a short kernel on the Y axis keeps different blocks of text separate.
        kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (30, 5))
        dilate = cv2.dilate(thresh, kernel, iterations=2)

        # Find all contours, largest first, and draw their bounding boxes for inspection
        contours, hierarchy = cv2.findContours(dilate, cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)
        contours = sorted(contours, key=cv2.contourArea, reverse=True)
        for c in contours:
            x, y, w, h = cv2.boundingRect(c)
            cv2.rectangle(newImage, (x, y), (x + w, y + h), (0, 255, 0), 2)

        # Find the largest contour and surround it with a minimum-area box
        largestContour = contours[0]
        print(len(contours))
        minAreaRect = cv2.minAreaRect(largestContour)
        cv2.imwrite("../data/temp/boxes.jpg", newImage)

        # Determine the angle and convert it to the value originally used to skew the image.
        # Note: this normalization assumes the older minAreaRect convention (angles in [-90, 0));
        # newer OpenCV releases return a different range, so check the value on your version.
        angle = minAreaRect[-1]
        if angle < -45:
            angle = 90 + angle
        return -1.0 * angle

    # Rotate the image around its center
    def rotateImage(cvImage, angle: float):
        newImage = cvImage.copy()
        (h, w) = newImage.shape[:2]
        center = (w // 2, h // 2)
        M = cv2.getRotationMatrix2D(center, angle, 1.0)
        newImage = cv2.warpAffine(newImage, M, (w, h), flags=cv2.INTER_CUBIC, borderMode=cv2.BORDER_REPLICATE)
        return newImage

    # Deskew image
    def deskew(cvImage):
        angle = getSkewAngle(cvImage)
        return rotateImage(cvImage, -1.0 * angle)

    fixed = deskew(new)
    cv2.imwrite("../data/temp/rotated_fixed.jpg", fixed)
    display("../data/temp/rotated_fixed.jpg")
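rotateImage passes the angle to cv2.getRotationMatrix2D. OpenCV documents that function as building the 2x3 affine matrix [[a, b, (1 - a)·cx - b·cy], [-b, a, b·cx + (1 - a)·cy]], where a = scale·cos(angle) and b = scale·sin(angle). Positive angles rotate counter-clockwise around the center. The NumPy sketch below rebuilds that matrix so you can check where a point lands. `rotation_matrix_2d` and `transform_point` are hypothetical helpers.

```python
import math

import numpy as np


def rotation_matrix_2d(center, angle_deg: float, scale: float = 1.0) -> np.ndarray:
    """2x3 matrix in the form documented for cv2.getRotationMatrix2D."""
    cx, cy = center
    a = scale * math.cos(math.radians(angle_deg))
    b = scale * math.sin(math.radians(angle_deg))
    return np.array([
        [a, b, (1 - a) * cx - b * cy],
        [-b, a, b * cx + (1 - a) * cy],
    ])


def transform_point(M: np.ndarray, point) -> np.ndarray:
    """Apply a 2x3 affine matrix to an (x, y) point."""
    x, y = point
    return M @ np.array([x, y, 1.0])
```

With a 90 degree angle, a point one pixel to the right of the center moves to one pixel above it (image y grows downward). This is the counter-clockwise turn that deskew relies on.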

Removing Borders

    display("../data/temp/no_noise.jpg")

    def remove_borders(image):
        # Find the outer contours of the bright regions and crop to the bounding box of the largest one
        contours, hierarchy = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
        cntsSorted = sorted(contours, key=lambda x: cv2.contourArea(x))
        cnt = cntsSorted[-1]
        x, y, w, h = cv2.boundingRect(cnt)
        crop = image[y:y+h, x:x+w]
        return crop

    no_borders = remove_borders(no_noise)
    cv2.imwrite("../data/temp/no_borders.jpg", no_borders)
    display('../data/temp/no_borders.jpg')

Missing Borders

    # Add a 150-pixel white border on every side: Tesseract can struggle with text that touches the image edge
    color = [255, 255, 255]
    top, bottom, left, right = [150] * 4
    image_with_border = cv2.copyMakeBorder(no_borders, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)
    cv2.imwrite("../data/temp/image_with_border.jpg", image_with_border)
    display("../data/temp/image_with_border.jpg")
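With BORDER_CONSTANT, copyMakeBorder is a constant padding, so the same effect can be expressed with NumPy's np.pad. The sketch below handles both grayscale and 3-channel images. `add_white_border` is a hypothetical helper, and the channel axis is left unpadded.

```python
import numpy as np


def add_white_border(image: np.ndarray, size: int = 150) -> np.ndarray:
    """Pad height and width with white (255); leave any channel axis alone."""
    pad = [(size, size), (size, size)] + [(0, 0)] * (image.ndim - 2)
    return np.pad(image, pad, mode="constant", constant_values=255)
```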

Transparency / Alpha Channel
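Images with an alpha channel (for example transparent PNGs) can OCR badly if the transparency is simply dropped, because fully transparent pixels may hold any colour, often black. Tesseract's image-quality guide says version 4 removes the alpha channel by blending it with a white background. The NumPy sketch below does the same compositing explicitly before OCR, assuming an 8-bit RGBA array. `flatten_alpha` is a hypothetical helper.

```python
import numpy as np


def flatten_alpha(rgba: np.ndarray, background: int = 255) -> np.ndarray:
    """Composite an 8-bit RGBA image onto a solid background and drop the alpha channel."""
    rgb = rgba[..., :3].astype(np.float64)
    alpha = rgba[..., 3:4].astype(np.float64) / 255.0  # keep a trailing axis for broadcasting
    out = rgb * alpha + background * (1.0 - alpha)
    return np.rint(out).astype(np.uint8)
```

Fully transparent pixels become white and fully opaque pixels keep their colour, so dark text on a transparent background ends up as dark text on white.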

Introduction to PyTesseract

https://github.com/1sitevn/python-jupyter/blob/main/ocr/03_OCR_Introduction_To_PyTesseract.ipynb

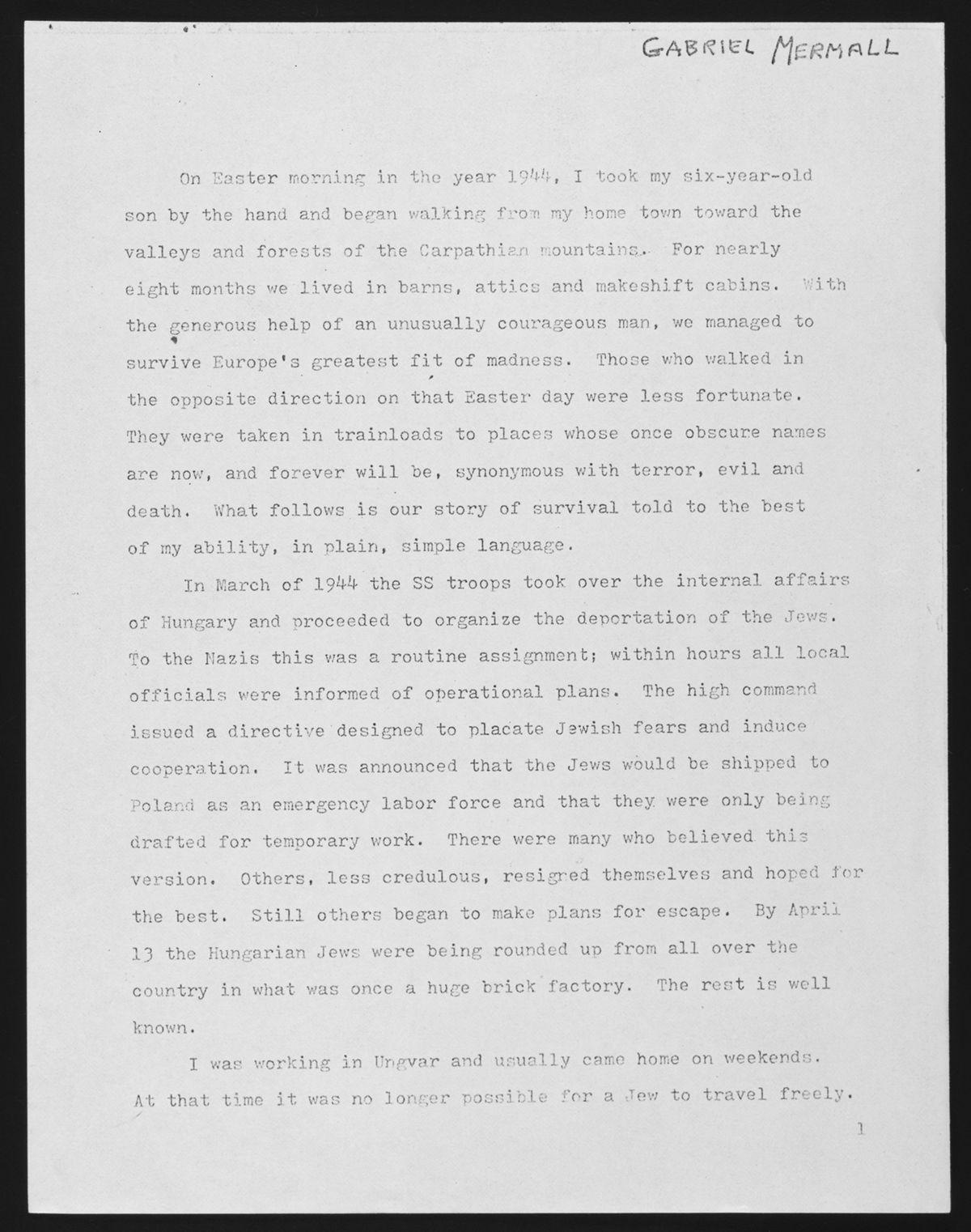

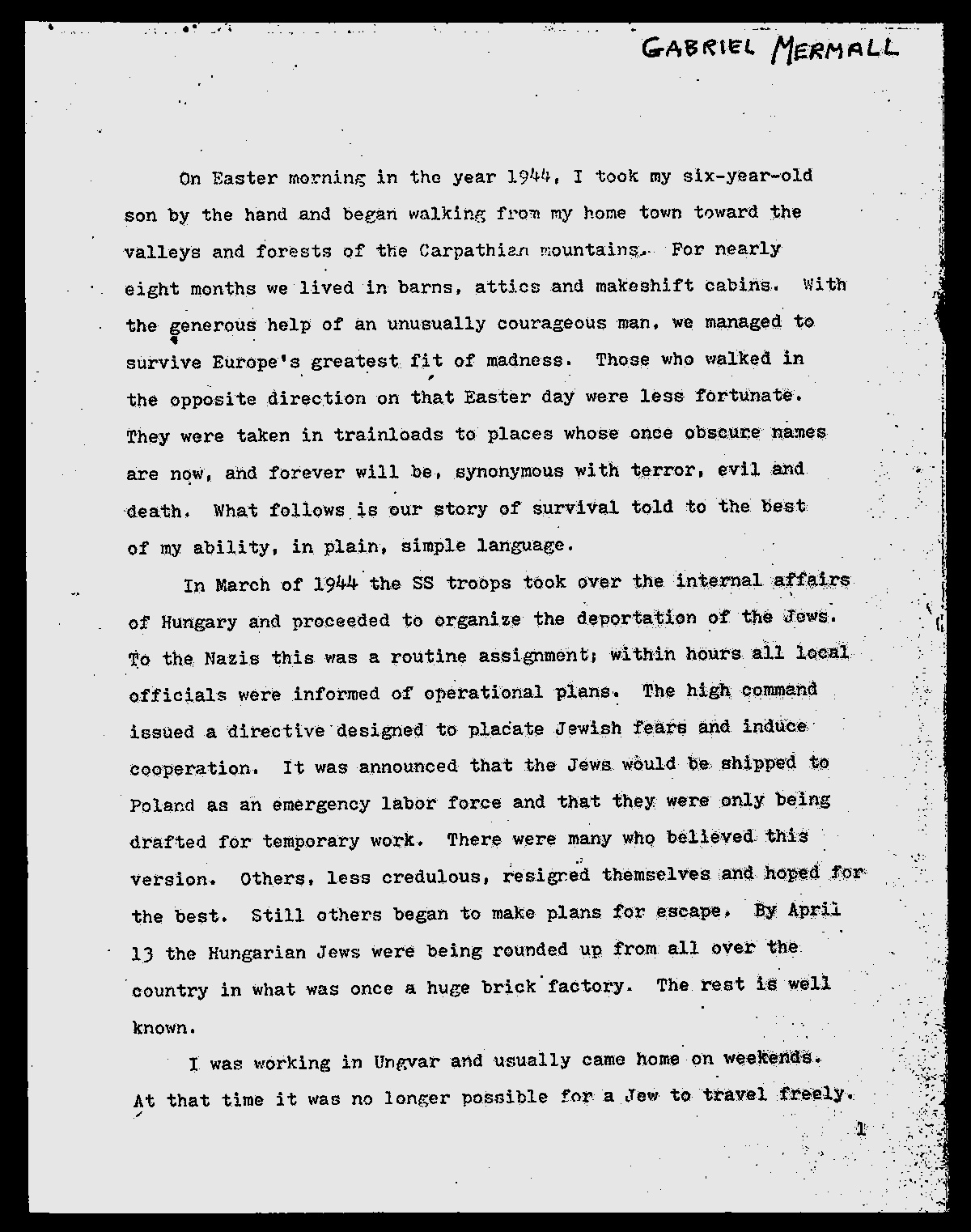

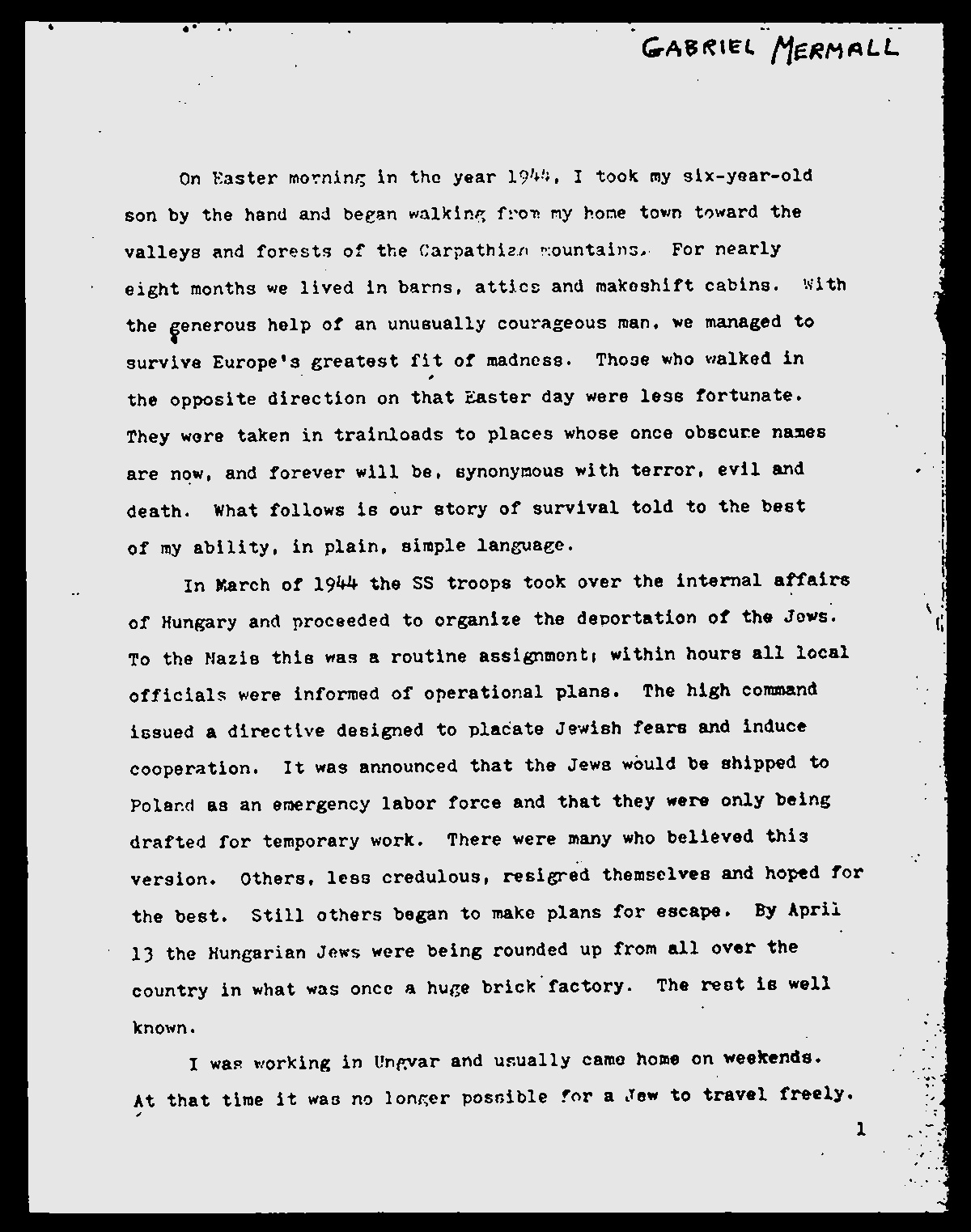

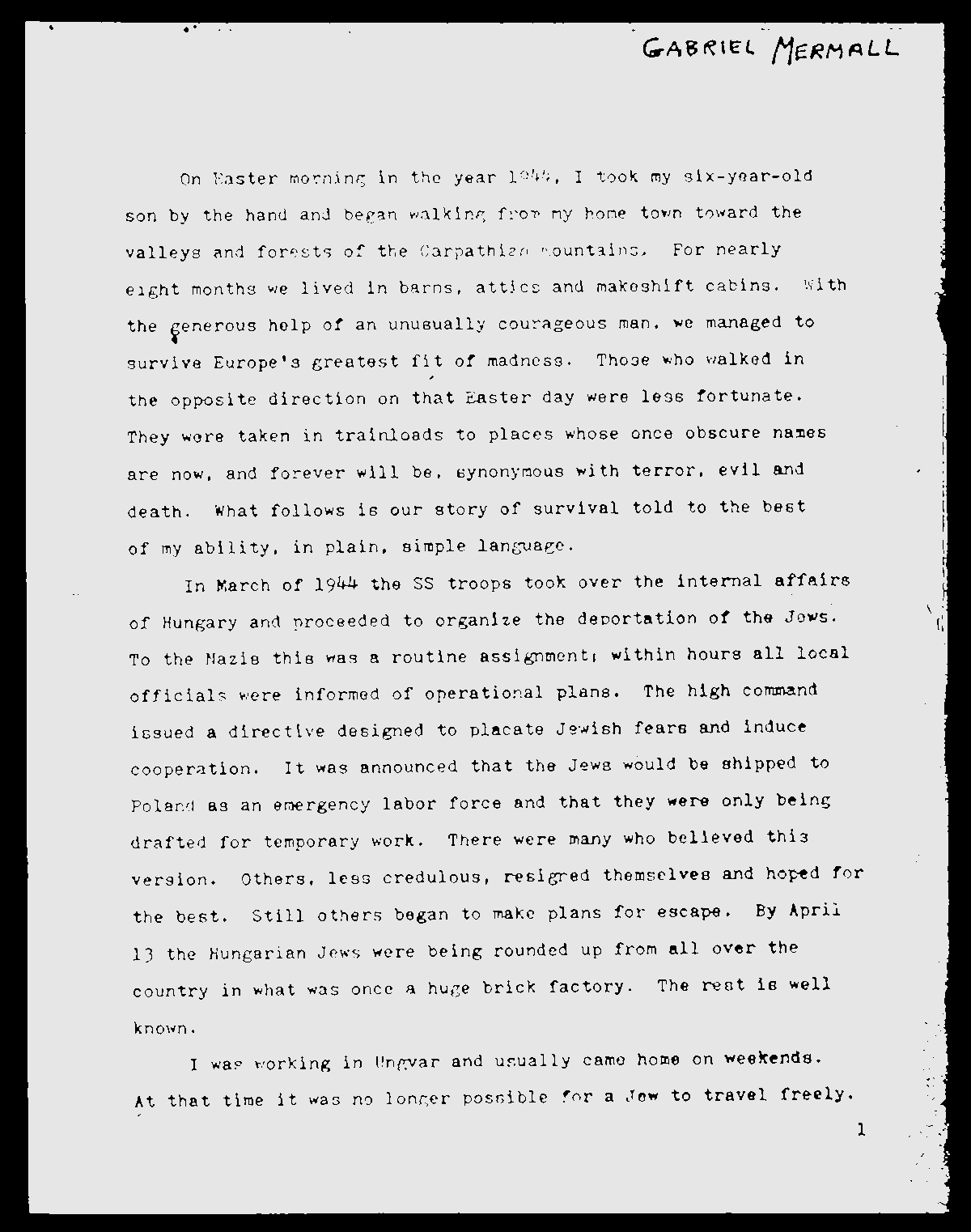

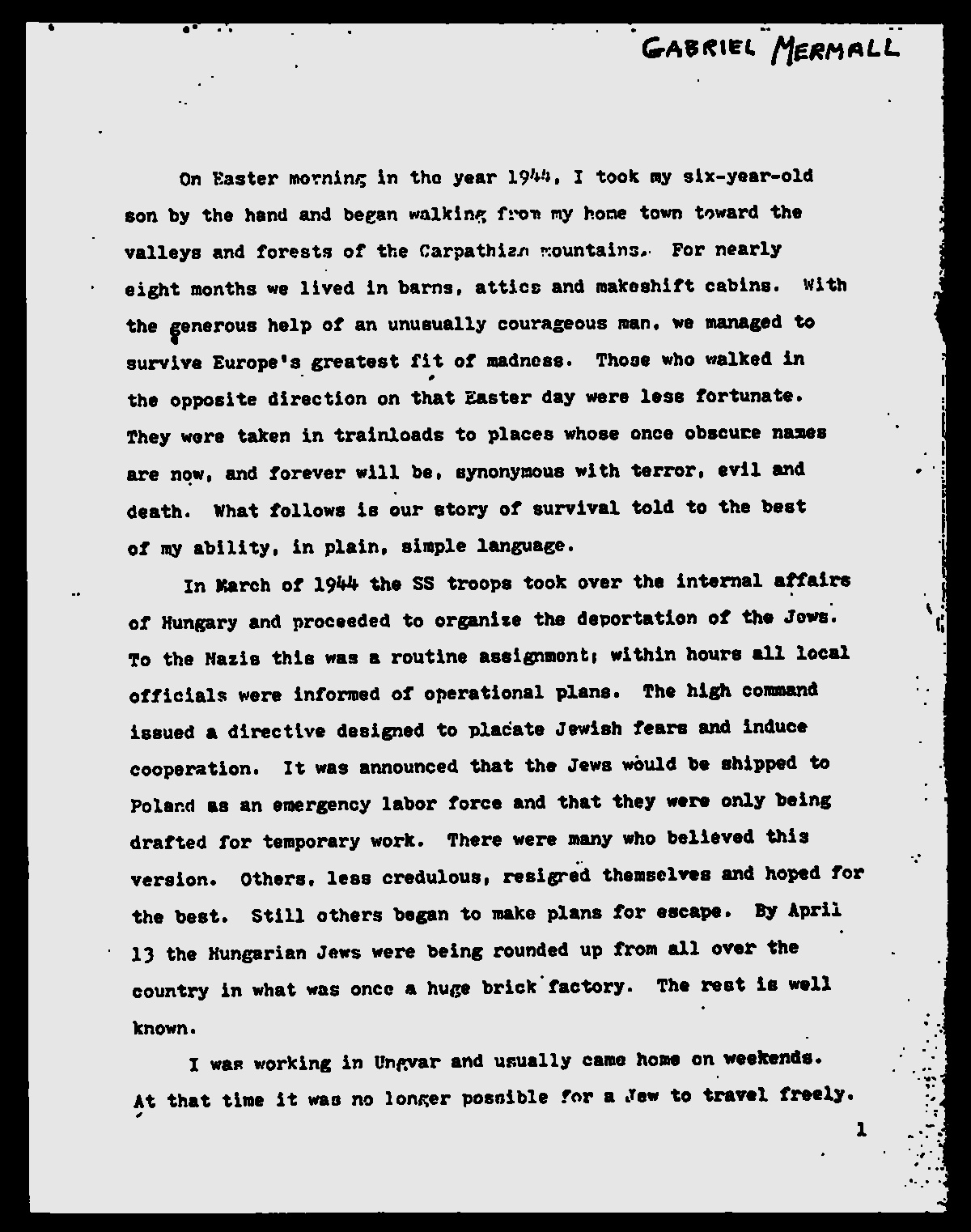

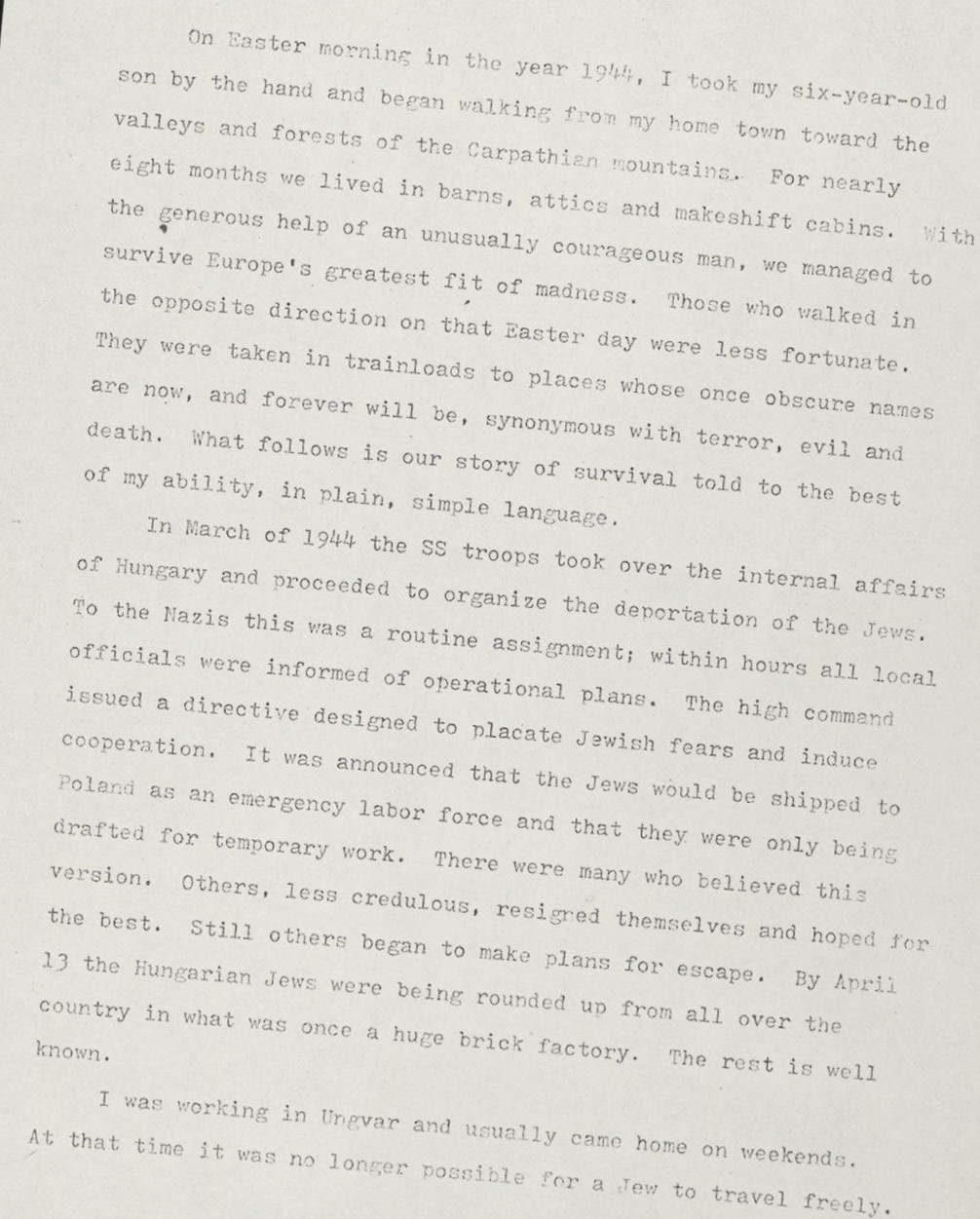

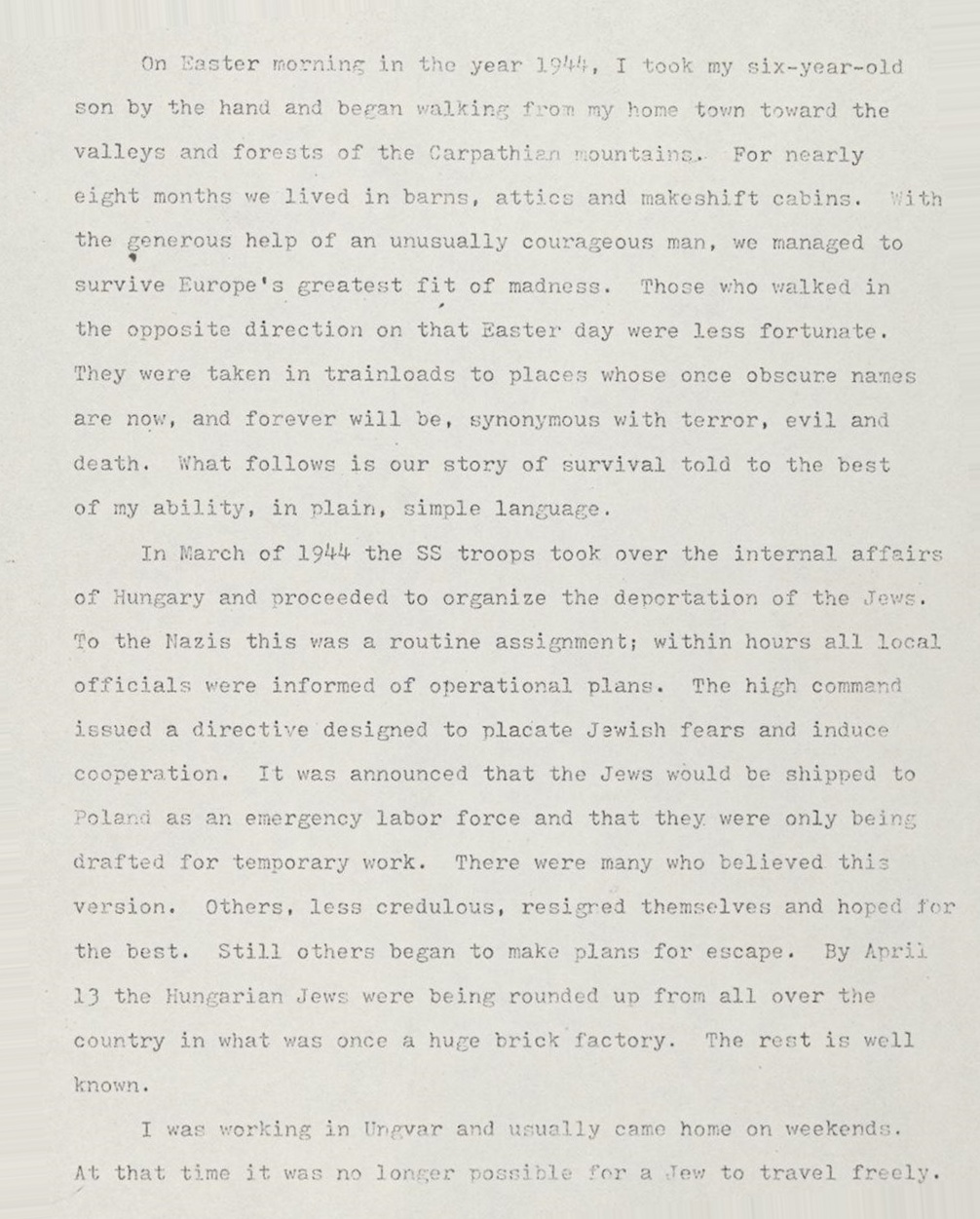

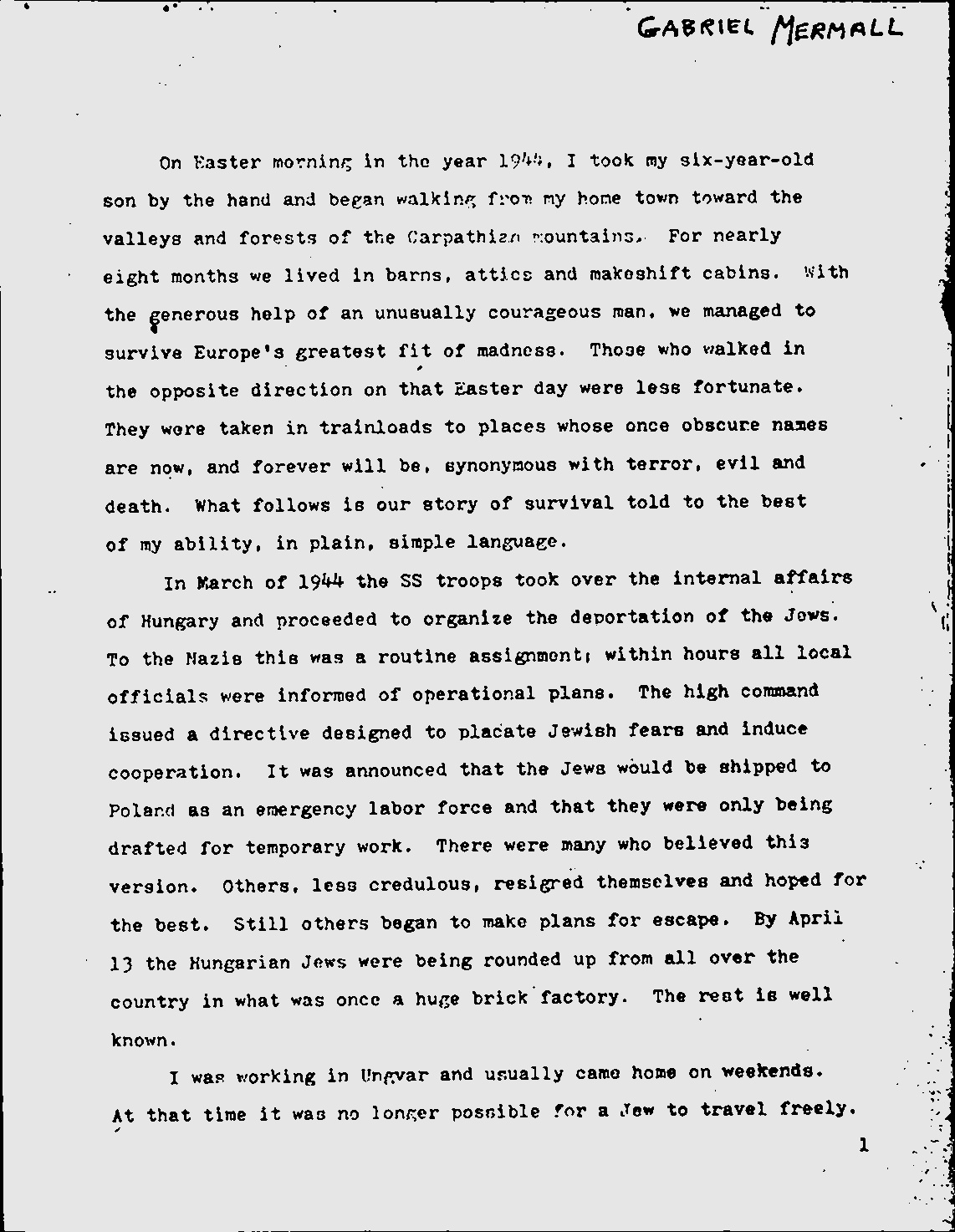

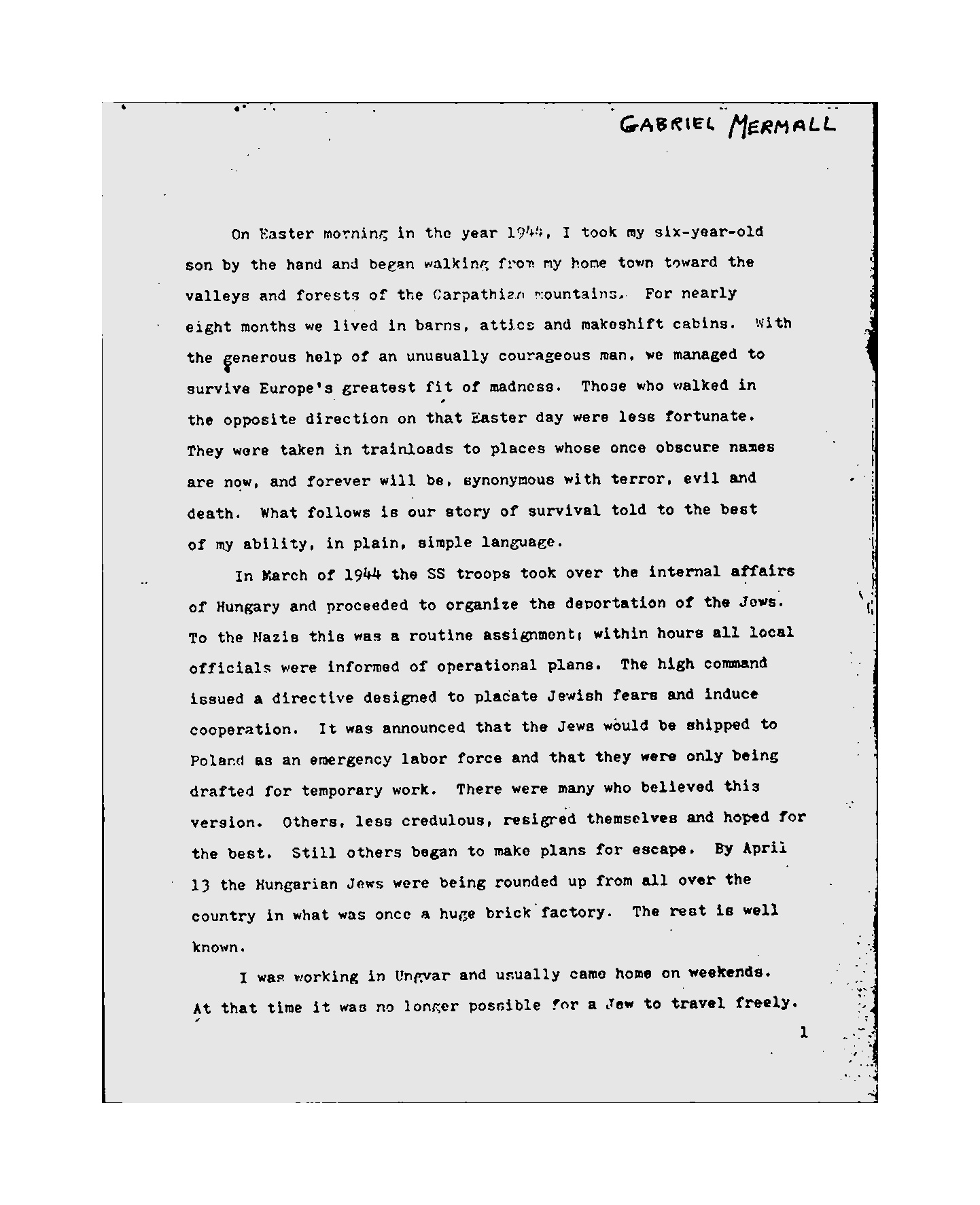

    import pytesseract
    from PIL import Image
    from IPython.display import display  # available automatically inside Jupyter

    img_file = "../data/ocr/page_01.jpg"
    no_noise = "../data/temp/no_noise.jpg"

    # OCR the original scan
    img1 = Image.open(img_file)
    display(img1)
    ocr_result1 = pytesseract.image_to_string(img1)
    print(ocr_result1)

    # OCR the preprocessed image
    img2 = Image.open(no_noise)
    display(img2)
    ocr_result2 = pytesseract.image_to_string(img2)
    print(ocr_result2)

Sample output (including the recognition errors Tesseract made):

    “GABRIEL Meamall

    On Easter movning in the year 1944, I took my six-year-old son by the hand and began walking fron my home town toward the valleys and forests of the Carpathizn mountains. For nearly eight months we lived in barns, attics and makeshift eabins. With the gene nous help of an unusually courageous man, we managed to survive Europe's greatest fit of madness. Those who walked in the opposite direction on that Easter day were lese fortunate. They were taken in trainloads to places whose once obscure names are now, and forever will be, synonymous with terror, evil and death.

    What follows is our story of survival told to the best of my ability, in plain, simple language.

    In March of 1944 the SS troops took over the internal affairs of Hungary and proceeded to organize the deportation of the dows. To the Nazie thie was a routine assignment; within hours all local officials were informed of operational plans. The high command issued a directive designed to placate Jewish fears and induce cooperation. It was announced that the Jews would be shipped to Poland as an emergency labor force and that they were only being drafted for temporary work. There were many who believed this version. Others, less credulous, resigred themselves and hoped for the vest. Still others began to make plans for escape.

    By Aprii 13 the Hungarian Jews were being rounded up from all over the . country in what was once a huge brick factory. The rest is well known. :

    J was working in Ungvar and usually came home on weekends. At that time it was no longer possible for a Jew to travel freely.
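The two printouts are easiest to compare word by word, to see which words preprocessing fixed or broke. A minimal sketch using only the standard library's difflib. `compare_ocr` is a hypothetical helper, and the similarity ratio is difflib's matching-based score, not a formal OCR accuracy metric.

```python
import difflib


def compare_ocr(a: str, b: str):
    """Return difflib's similarity ratio and the differing word spans of two OCR texts."""
    a_words, b_words = a.split(), b.split()
    matcher = difflib.SequenceMatcher(None, a_words, b_words)
    changes = [
        (" ".join(a_words[i1:i2]), " ".join(b_words[j1:j2]))
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]
    return matcher.ratio(), changes
```

In the notebook: `ratio, changes = compare_ocr(ocr_result1, ocr_result2)`, then print `changes` to list each pair of differing words.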

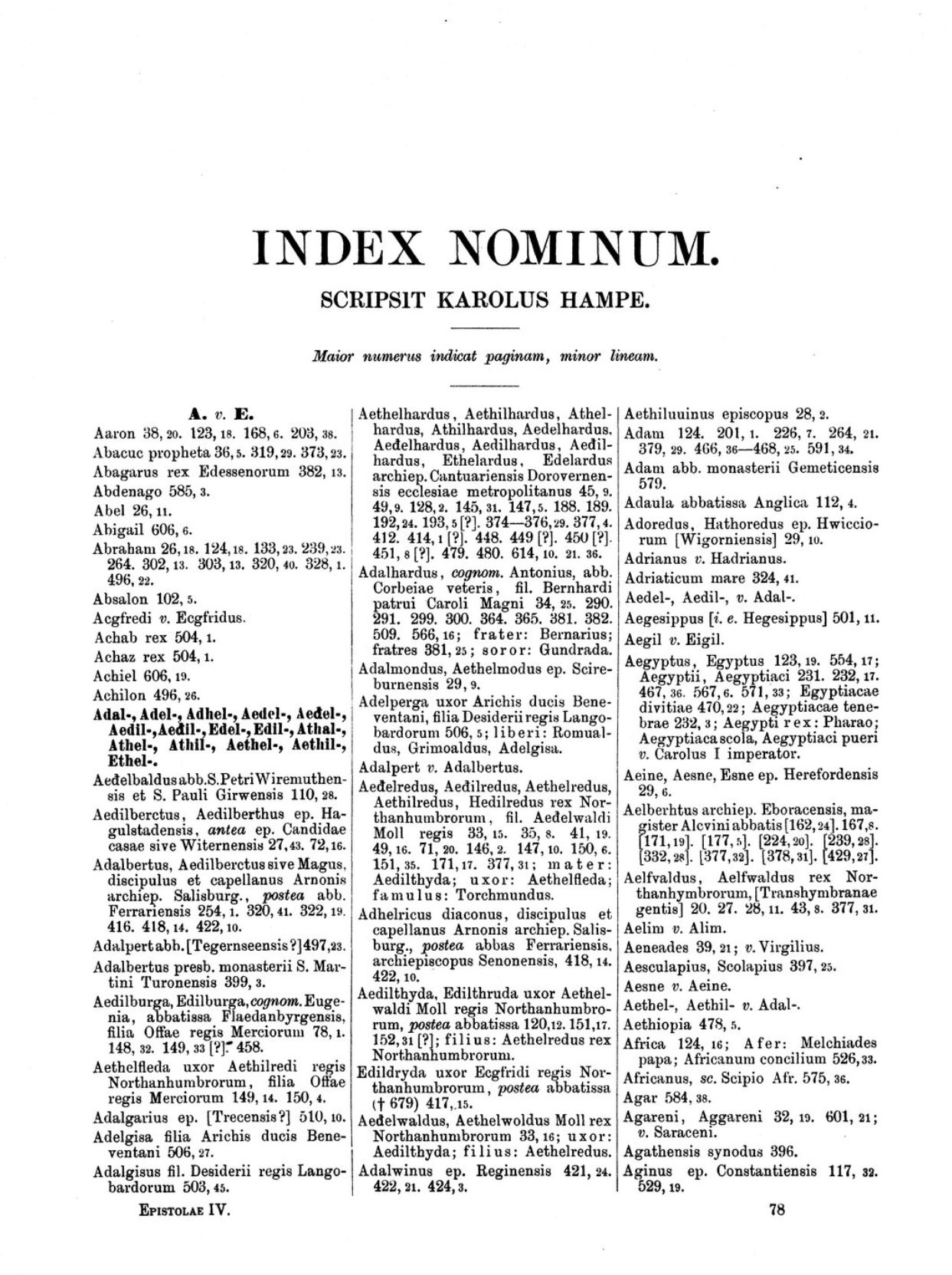

OCR an Index with PyTesseract

https://github.com/1sitevn/python-jupyter/blob/main/ocr/04_OCR_an_Index_with_PyTesseract.ipynb

    import pytesseract
    from PIL import Image

    image_file = "../data/ocr/index_02.jpg"
    img = Image.open(image_file)
    ocr_result = pytesseract.image_to_string(img)
    print(ocr_result)

    # Index entries are separated by blank lines in the OCR output
    lines = ocr_result.split("\n\n")
    for line in lines:
        temp_line = line.replace(",", "")
        # Skip blocks that contain only page numbers
        if temp_line.isdigit():
            continue
        components = []
        for seg in line.split(","):
            seg = seg.strip()
            # Keep only text segments; any segment containing a digit is a page reference
            if not any(character.isdigit() for character in seg):
                components.append(seg)
        print(components)
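The loop above discards the page references. To keep them, a variant could split each entry into its text components and its page numbers. This sketch assumes commas separate the parts of an entry and hyphens mark page ranges, and it keeps only the two endpoints of a range. `parse_index_entry` and the sample entry are hypothetical.

```python
import re


def parse_index_entry(entry: str):
    """Split one OCR'd index entry into text components and page numbers."""
    terms, pages = [], []
    for seg in (s.strip() for s in entry.split(",")):
        if not seg:
            continue
        if any(ch.isdigit() for ch in seg):
            # Every run of digits is a page number, e.g. "45-47" -> 45, 47
            pages.extend(int(n) for n in re.findall(r"\d+", seg))
        else:
            terms.append(seg)
    return terms, pages
```

For example, `parse_index_entry("Berlin, Germany, 23, 45-47")` returns `(["Berlin", "Germany"], [23, 45, 47])`.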

Bounding Boxes with OpenCV

https://github.com/1sitevn/python-jupyter/blob/main/ocr/05_OCR_Bounding_Boxes_with_OpenCV.ipynb

    import cv2
    import pytesseract

    image = cv2.imread("../data/ocr/index_02.JPG")
    base_image = image.copy()

    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    cv2.imwrite("../data/temp/index_gray.png", gray)

    blur = cv2.GaussianBlur(gray, (7, 7), 0)
    cv2.imwrite("../data/temp/index_blur.png", blur)

    # Inverted Otsu threshold: text becomes white on a black background
    thresh = cv2.threshold(blur, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]
    cv2.imwrite("../data/temp/index_thresh.png", thresh)

    # A narrow, tall kernel (width 3, height 13) merges lines vertically into column blocks
    kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (3, 13))
    dilate = cv2.dilate(thresh, kernel, iterations=1)
    cv2.imwrite("../data/temp/index_dilate.png", dilate)

    # findContours returns 2 values in OpenCV 4.x and 3 values in OpenCV 3.x
    cnts = cv2.findContours(dilate, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    cnts = cnts[0] if len(cnts) == 2 else cnts[1]
    # Sort left to right so the columns are read in order
    cnts = sorted(cnts, key=lambda x: cv2.boundingRect(x)[0])

    results = []
    for c in cnts:
        x, y, w, h = cv2.boundingRect(c)
        if h > 200 and w > 20:
            # Crop from the untouched copy so the drawn rectangles don't leak into the OCR input
            roi = base_image[y:y+h, x:x+w]
            cv2.rectangle(image, (x, y), (x + w, y + h), (36, 255, 12), 2)
            ocr_result = pytesseract.image_to_string(roi)
            results.extend(ocr_result.split("\n"))

    cv2.imwrite("../data/temp/index_bbox_new.png", image)
    print(results)
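cv2.getStructuringElement takes its size as (width, height), so (3, 13) is a narrow, tall rectangle. Dilating with it bridges the vertical gaps between the lines of one column while leaving the wider gap between columns open, and each column becomes one contour. A NumPy-only sketch of binary dilation with a rectangular kernel demonstrates this on a synthetic mask. `dilate_rect` is a hypothetical helper, not an OpenCV function.

```python
import numpy as np


def dilate_rect(binary: np.ndarray, kw: int, kh: int) -> np.ndarray:
    """Binary dilation with a kw x kh rectangle (width first, as in OpenCV's ksize)."""
    h, w = binary.shape
    py, px = kh // 2, kw // 2
    # Pad so every kh x kw window fits; zeros count as background
    padded = np.pad(binary, ((py, kh - 1 - py), (px, kw - 1 - px)), constant_values=0)
    out = np.zeros_like(binary)
    for dy in range(kh):
        for dx in range(kw):
            out = np.maximum(out, padded[dy:dy + h, dx:dx + w])
    return out
```

With two text lines 5 rows apart in one column and a second column 14 pixels to the right, the (3, 13) kernel fills the gap between the lines but not the gap between the columns.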

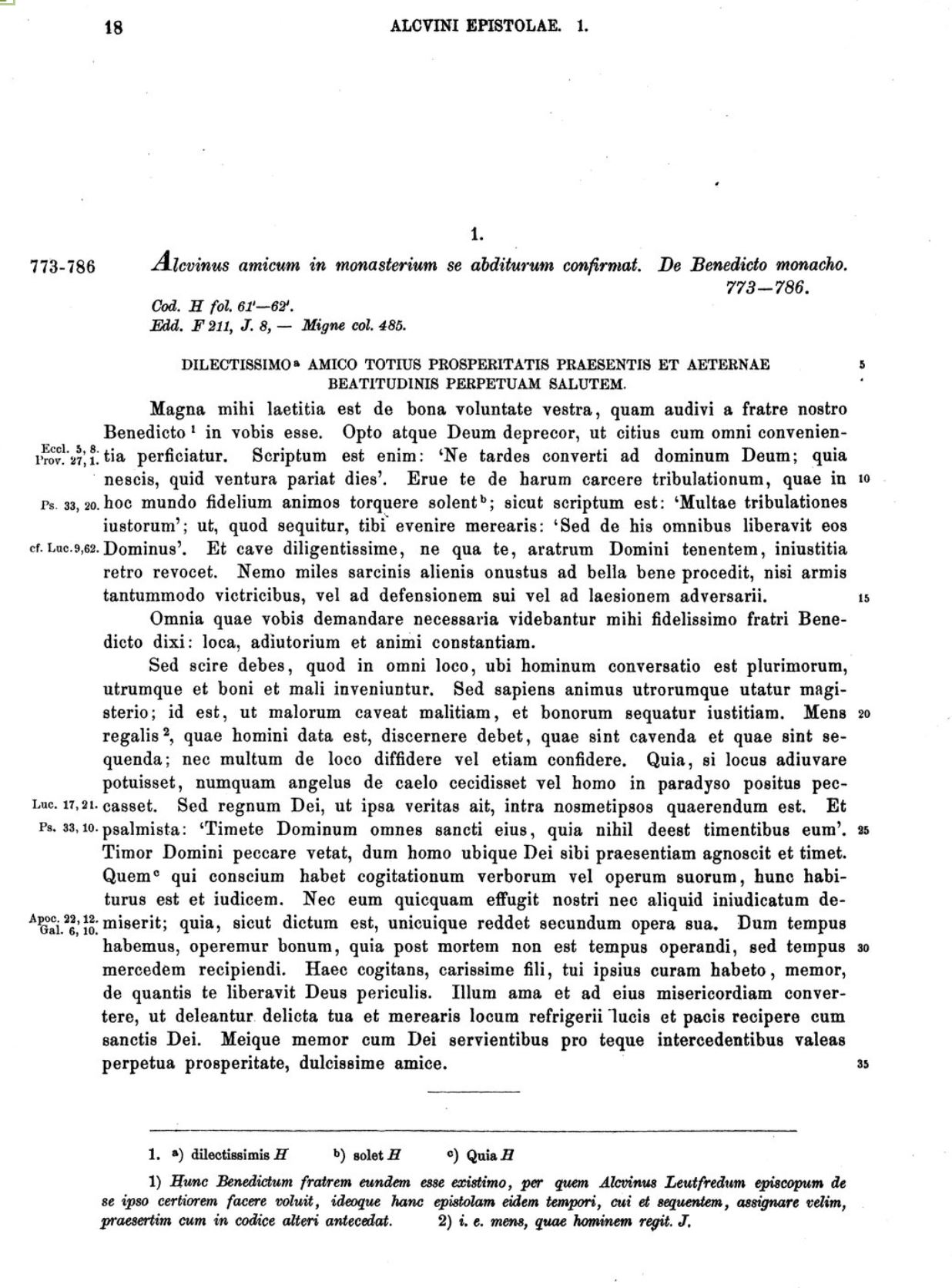

OCR a Text with Marginalia by Extracting the Body

    import cv2
    import pytesseract

    image = cv2.imread("../data/ocr/sample_mgh.JPG")
    base_image = image.copy()
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    blur = cv2.GaussianBlur(gray, (7, 7), 0)
    thresh = cv2.threshold(blur, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]

    # A very tall kernel (width 3, height 50) merges the body text into one block, apart from the marginal notes
    kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (3, 50))
    dilate = cv2.dilate(thresh, kernel, iterations=1)
    cv2.imwrite("../data/temp/sample_dilated.png", dilate)

    cnts = cv2.findContours(dilate, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    cnts = cnts[0] if len(cnts) == 2 else cnts[1]
    cnts = sorted(cnts, key=lambda x: cv2.boundingRect(x)[1])

    for c in cnts:
        x, y, w, h = cv2.boundingRect(c)
        # Only large blocks are treated as body text
        if h > 200 and w > 250:
            roi = base_image[y:y+h, x:x+w]
            cv2.rectangle(image, (x, y), (x + w, y + h), (36, 255, 12), 2)
    cv2.imwrite("../data/temp/sample_boxes.png", image)

    # OCR the whole page first, then only the body block
    ocr_result_original = pytesseract.image_to_string(base_image)
    print(ocr_result_original)

    # roi is the last block that passed the size filter (undefined if none did)
    ocr_result_new = pytesseract.image_to_string(roi)
    print(ocr_result_new)
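In the loop above, roi ends up as whichever qualifying block comes last in top-to-bottom order. If a page yields several large blocks, choosing the largest one is a more deliberate way to pick the body text. A minimal sketch over plain (x, y, w, h) tuples as returned by cv2.boundingRect. `pick_body_box` is a hypothetical helper, and its default thresholds mirror the notebook's.

```python
def pick_body_box(boxes, min_h=200, min_w=250):
    """Return the largest (x, y, w, h) box that passes the size filter, or None."""
    candidates = [b for b in boxes if b[3] > min_h and b[2] > min_w]
    if not candidates:
        return None
    # Largest area wins: w * h
    return max(candidates, key=lambda b: b[2] * b[3])
```

Usage in the notebook: `box = pick_body_box([cv2.boundingRect(c) for c in cnts])`. If `box` is not `None`, crop with `x, y, w, h = box` and `roi = base_image[y:y+h, x:x+w]`. Returning `None` also avoids the undefined-`roi` error when no block qualifies.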

Separate a Footnote from Body Text

    import cv2
    import pytesseract

    image = cv2.imread('../data/ocr/sample_mgh_2.jpg')
    base_image = image.copy()
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    blur = cv2.GaussianBlur(gray, (7, 7), 0)
    thresh = cv2.threshold(blur, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]

    # Create rectangular structuring element and dilate
    kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (3, 25))
    dilate = cv2.dilate(thresh, kernel, iterations=1)

    # Find contours and sort them top to bottom
    cnts = cv2.findContours(dilate, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    cnts = cnts[0] if len(cnts) == 2 else cnts[1]
    cnts = sorted(cnts, key=lambda x: cv2.boundingRect(x)[1])

    main_text = ""
    for c in cnts:
        x, y, w, h = cv2.boundingRect(c)
        if h > 200 and w > 250:
            roi = base_image[y:y+h, 0:x]
            # cv2.rectangle(image, (0, y), (x, 0 + h+20), (36,255,12), 2)
            # Pad the crop with a 30-pixel white border before OCR
            constant = cv2.copyMakeBorder(roi.copy(), 30, 30, 30, 30, cv2.BORDER_CONSTANT, value=[255, 255, 255])
            ocr_result = pytesseract.image_to_string(constant)
            cv2.imwrite("../data/temp/output.png", roi)
            print(ocr_result)
    # print(ocr_result)
    # cv2.imwrite("temp/output.png", image)